Sunday, December 25, 2011

Software Design

Wednesday, October 5, 2011

Architecture of Integration Services

Of the components shown in the previous diagram, here are some important components to using Integration Services succesfully:

SSIS Designer

SSIS Designer is a graphical tool that you can use to create and maintain Integration Services packages. SSIS Designer is available in Business Intelligence Development Studio as part of an Integration Services project.

Runtime engine

The Integration Services runtime saves the layout of packages, runs packages, and provides support for logging, breakpoints, configuration, connections, and transactions.

Tasks and other executables

The Integration Services run-time executables are the package, containers, tasks, and event handlers that Integration Services includes. Run-time executables also include custom tasks that you develop.

Data Flow engine (also known as the pipeline) and Data Flow components

The Data Flow task encapsulates the data flow engine. The data flow engine provides the in-memory buffers that move data from source to destination, and calls the sources that extract data from files and relational databases. The data flow engine also manages the transformations that modify data, and the destinations that load data or make data available to other processes. Integration Services data flow components are the sources, transformations, and destinations that Integration Services includes. You can also include custom components in a data flow.

API or object model

The Integration Services object model includes managed application programming interfaces (API) for creating custom components for use in packages, or custom applications that create, load, run, and manage packages. Developer can write custom applications or custom tasks or transformations by using any common language runtime (CLR) compliant language.

Integration Services Service

The Integration Services service lets you use SQL Server Management Studio to monitor running Integration Services packages and to manage the storage of packages.

SQL Server Import and Export Wizard

The SQL Server Import and Export Wizard can copy data to and from any data source for which a managed .NET Framework data provider or a native OLE DB provider is available. This wizard also offers the simplest method to create an Integration Services package that copies data from a source to a destination.

Other tools, wizards, and command prompt utilities

Integration Services includes additional tools, wizards, and command prompt utilities for running and managing Integration Services packagesSQL Server 2008 Integration Services

SQL Server 2008 Integration Services

The traditional ETL-centric data integration from standard data sources continues to be at the heart of most data warehouses. However, demands to include more diverse data sources, regulatory requirements, and global and online operations are quickly transforming the traditional requirements for data integration. In this fast growing and changing landscape, the need to extract value from data and the need to be able to rely on it is more important than ever before. Effective data integration has become the basis of effective decision-making. SQL Server Integration Services provides a flexible, fast, and scalable architecture that enables effective data integration in current business environments.

how SQL Server Integration Services (SSIS) is an effective toolset for both the traditional demands of ETL operations, as well as for the evolving needs of general-purpose data integration. We will also discuss how SSIS is fundamentally different from the tools and solutions provided by major ETL vendors so it is ideally suited to address the changing demands of global business from the largest enterprise to the smallest business.

SSIS Architecture

Task flow and data flow engine

SSIS consists of both an operations-oriented task-flow engine as well as a scalable and fast data-flow engine. The data flow exists in the context of an overall task flow. The task-flow engine provides the runtime resource and operational support for the data-flow engine. This combination of task flow and data flow enables SSIS to be effective in traditional ETL or data warehouse (DW) scenarios as well as in many other extended scenarios such as data center operations. In this paper we will mainly focus on the data-flow related scenarios. The use of SSIS for data center oriented workflow is a separate topic by itself.

Pipeline architecture

At the core of SSIS is the data transformation pipeline. This pipeline has a buffer-oriented architecture that is extremely fast at manipulating rowsets of data once they have been loaded into memory. The approach is to perform all data transformation steps of the ETL process in a single operation without staging data, although specific transformation or operational requirements, or indeed hardware may be a hindrance. Nevertheless, for maximum performance, the architecture avoids staging. SSIS even avoids copying the data in memory as far as possible. This is in contrast to traditional ETL tools, which often require staging at almost every step of the warehousing and integration process. The ability to manipulate data without staging extends beyond traditional relational and flat file data and beyond traditional ETL transformation capabilities. With SSIS, all types of data (structured, unstructured, XML, etc.) are converted to a tabular (columns and rows) structure before being loaded into its buffers. Any data operation that you can apply to tabular data, you can also apply to the data at any step in the data-flow pipeline. This means that a single data-flow pipeline can integrate diverse sources of data and perform arbitrarily complex operations on this data without having to stage the data.

You should note though, that if staging is required for business or operational reasons, SSIS has good support for these implementations as well.

This architecture enables you to use SSIS in a variety of data integration scenarios, ranging from traditional DW-oriented ETL to nontraditional information integration technologies.

ADO.NET connectivity

A significant aspect of an integration services solution is the extraction or loading of data. It is therefore important that your integration solution can connect seamlessly to a wide range of data sources to make the most of the performance and reliability benefits brought by a comprehensive data access platform. SQL Server 2008 Integration Services is optimized for ADO.NET connectivity (previous versions were optimized for OLE DB or ODBC.) The move to ADO.NET improves system integration and third party support. SQL Server 2005 Integration Services used OLE DB for important tasks such as lookups, but now you can use ADO.NET for tasks as well as source and destination components.

Thread pooling

As an integration solution is scaled up, it often reaches a plateau beyond which performance improvements are difficult to achieve. SQL Server 2008 Integration Services breaks through this limitation by sharing threads among multiple components, which increases parallelism and reduces blocking; and therefore increases performance in large highly parallel, multiple-processor, multiple-core systems.

As well as increasing performance on most systems, thread pooling also reduces the need for manual configuration of SSIS packages to increase parallelism, and therefore increased developer productivity.

Persistent lookups

Performing lookups is one of the most common operations in an integration solution. This is especially prevalent in data warehousing where fact records use lookups to transform business keys to their corresponding surrogates. SQL Server 2008 Integration Services increases the performance of lookups to scale to meet the largest tables.

You can configure Lookup transformations to cache some, or all, of the reference data before the input column is processed. SQL Server 2008 Integration Services can load a full cache from any source and allows the cache to be greater than 4 GB, even on a 32-bit operating system. By using a partial cache, SQL Server 2008 Integration Services pre-charges the Lookup by using the data flow. Partial caches support OLEDB, ADO.Net, and ODBC for database lookups, and they track lookup hits and misses. If you choose not to pre-cache reference data, SQL Server 2008 Integration Services supports batched database calls and case-insensitive matches.

Integration Scenarios

SSIS for data transfer operations

Although SQL Server 2005 Integration Services was a much more functional and powerful product than previous versions, many users found that simple data transfers by using the wizard was more complex and less functional. SQL Server 2008 Integration Services has an improved wizard that uses ADO.NET, has an improved user interface, performs automatic data type conversions, and is more scalable than previous versions.

Figure 2

SSIS for data warehouse loading

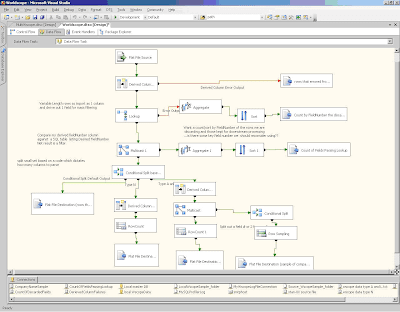

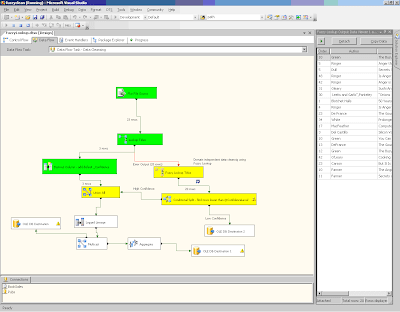

At its core, SSIS is a comprehensive, fully functional ETL tool. Its functionality, scale, and performance compare very favorably with high-end competitors in the market at a fraction of their cost. The data integration pipeline architecture allows it to consume data from multiple simultaneous sources, perform multiple complex transformations, and then land the data to multiple simultaneous destinations. This architecture allows SSIS to be used not only for large datasets, but also for complex data flows. As the data flows from source(s) to destination(s), you can split, merge, and combine the stream of data with other data streams, and otherwise manipulate it. Figure 3 shows an example of such a flow.

Figure 3

SQL Server 2008 includes support for Change Data Capture (CDC), which you can use to record insert, update, and delete activity in SQL Server tables, and make the details of the changes available in an easily consumed relational format. You can take advantage of CDC when implementing an ETL solution with SQL Server 2008 Integration Services to ensure that only changed data is included in the extraction process, which eliminates the overhead of performing a full data refresh that includes unchanged data in each ETL operation.

SSIS can consume data from (and load data into) a variety of sources including managed (ADO.NET), OLE DB, ODBC, flat file, Microsoft Office Excel®, and XML by using a specialized set of components called adapters. SSIS can even consume data from custom data adapters (developed in-house or by third parties) so you can wrap legacy data loading logic into a data source that you can then seamlessly integrate into the SSIS data flow. SSIS includes a set of powerful data transformation components that allow data manipulations that are essential for building data warehouses. These transformation components include:

• Aggregate. Performs multiple aggregates in a single pass.

• Sort. Sorts data in the flow.

• Lookup. Performs flexible cached lookup operations to reference datasets.

• Pivot and UnPivot. Two separate transformations pivot and unpivot data in the flow.

• Merge, Merge Join, and UnionAll. Can perform join and union operations.

• Derived Column. Performs column-level manipulations such as string, numeric, and date/time operations, and code page translations. This one component wraps what other vendors might break up into many different transformations.

• Data Conversion. Converts data between various types (such as numeric and string).

• Audit. Adds columns with lineage metadata and other operational audit data.

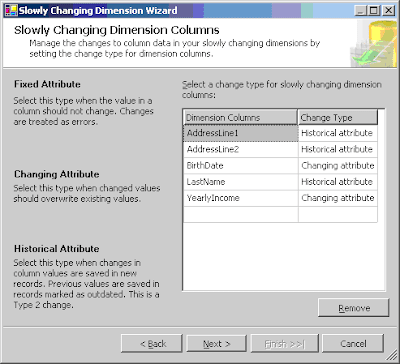

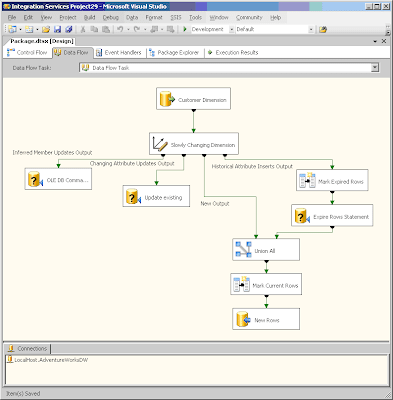

In addition to these core data warehousing transformations, SSIS includes support for advanced data warehousing needs such as Slowly Changing Dimensions (SCD). The SCD Wizard in SSIS guides users through specifying their requirements for managing slowly changing dimensions and, based upon their input, generates a complete data flow with multiple transformations to implement the slowly changing dimension load. Support for standard Type 1 and 2 SCD along with two new SCD types (Fixed Attributes and Inferred Members) is provided. Figure 4 shows a page from the SCD Wizard.

Figure 4

Figure 5 shows the data flow that is generated by this Wizard.

Figure 5

You can also use SSIS to load Analysis Services multidimensional OLAP (MOLAP) caches directly from the data-flow pipeline. This means that not only can you use SSIS to create relational data warehouses, but you can also use it to load multidimensional cubes for analytical applications.

SSIS and Data Quality

One of the key features of SSIS, as well as its ability to integrate data, is its ability to integrate different technologies to manipulate the data. This has allowed SSIS to include innovative “fuzzy logic”–based data cleansing components. The Microsoft Research labs developed these components and they represent the latest research in this area. The approach taken is a domain independent one and does not depend upon any specific domain data, such as address/zip reference data. This allows you to use these transformations for cleansing most types of data, not just address data.

SSIS deeply integrates with the data mining functionality in Analysis Services. Data mining abstracts out the patterns in a dataset and encapsulates them in a mining model. You can then use this mining model to make predictions on what data belongs to a dataset and what data may be anomalous. So you can use data mining as a tool for implementing data quality.

Support for complex data routing in SSIS helps you to not only identify anomalous data, but also to automatically correct it and replace it with better values. This enables “closed loop” cleansing scenarios. Figure 6 shows an example of a closed loop cleansing data flow.

Figure 6

In addition to its built-in data quality features, SSIS can be extended to work closely with third-party data-cleansing solutions.

Application of SSIS Beyond Traditional ETL

The ability of the data-flow pipeline to manipulate almost any kind of data, the deep integration with Analysis Services, the support for extending it with a large variety of data manipulation technologies, and the inclusion of a rich work-flow engine allow SSIS to be used in many scenarios that are not traditionally thought of as ETL

Service Oriented Architecture

SSIS includes support for sourcing XML data in the data-flow pipeline, including data both from files on disk as well as URLs over HTTP. XML data is “shredded” into tabular data, which then can be easily manipulated in the data flow. This support for XML can work with the support for Web services. SSIS can interact with Web services in the control flow to capture XML data.

You can capture XML from files, from Microsoft Message Queuing (MSMQ), and over the Web via HTTP. SSIS enables the manipulation of the XML with XSLT, XPATH, diff/merge, etc. and can stream the XML into the data flow.

This support enables SSIS to participate in flexible Service Oriented Architectures (SOA).

Data and Text mining

SSIS not only has deep integration with the data mining features from Analysis Services, but it also has text mining components. Text mining (also referred to as text classification) involves identifying the relationship between business categories and the text data (words and phrases). This allows the discovery of key terms in text data and, based upon this, to identify text that is “interesting” automatically. This in turn can drive “closed-loop” actions to achieve business goals such as increasing customer satisfaction and enhancing the quality of the products and services.

On-Demand Data Source

One of the unique features in SSIS is the DataReader destination, which lands data into an ADO.NET DataReader. When this component is included in a data-flow pipeline, you can use the package that contains the DataReader destination as a data source, exposed as an ADO.NET DataReader itself. So you can use SSIS not only as a traditional ETL to load data warehouses, but also as a data source that can deliver integrated, reconciled, and cleansed data from multiple sources on-demand. For example, you might use this to help Reporting Services to consume data from multiple diverse data sources by using a SSIS package as its data source.

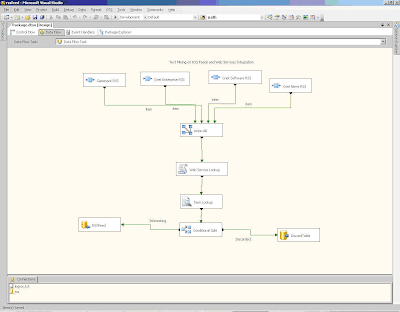

A possible scenario that integrates all of these features consists of identifying and delivering interesting articles from RSS feeds as part of a regular report. Figure 7 shows a SSIS package that sources data from RSS feeds over the Internet, integrates with data from a Web service, performs text mining to find interesting articles from the RSS feeds, and then places the interesting articles into a DataReader destination to be finally consumed by a Reporting Services report.

Figure 7

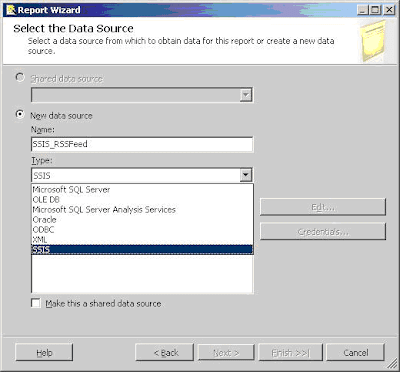

Figure 8 shows the use of the SSIS package as a data source in the Report Wizard.

Figure 8

From an ETL tool perspective, this scenario is very unusual because there is no data extraction, transformation, or loading.

SSIS, the Integration Platform

SSIS goes beyond being an ETL tool not only in terms of enabling nontraditional scenarios, but also in being a true platform for data integration. SSIS is part of the SQL Server Business Intelligence (BI) platform that enables the development of end-to-end BI applications.

Integrated Development Platform

SQL Server Integration Services, Analysis Services, and Reporting Services all use a common Microsoft Visual Studio® based development environment called the SQL Server Business Intelligence (BI) Development Studio. BI Development Studio provides an integrated development environment (IDE) for BI application development. This shared infrastructure enables metadata-level integration between various development projects (integration, analysis, and reporting). An example of such shared construct is the Data Source View (DSV), which is an offline schema/view definition of data sources, and is used by all three BI project types.

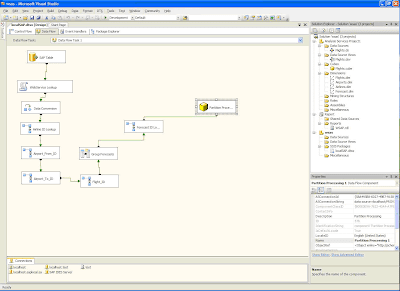

This IDE provides facilities such as integration with version control software (e.g., VSS) along with support for team-based features such as “check-in/check-out” and as such it fulfills the need for an enterprise-class team-oriented development environment for business intelligence applications. Figure 9 shows a BI Development Studio solution that consists of Integration, Analysis, and Reporting projects.

Figure 9

Not only does this provide a single place to develop BI applications, but it also can be used to develop other Visual Studio projects (using Visual C#®, Visual Basic® .NET etc.) and so can provide developers with a true end-to-end development experience.

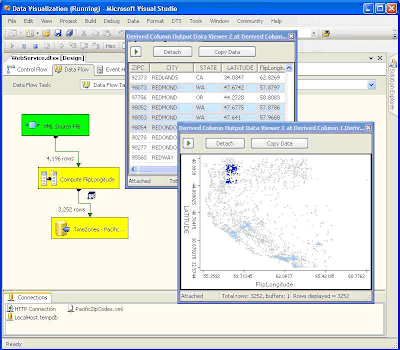

Besides an integrated BI development environment, BI Development Studio has features for true run-time debugging of SSIS packages. These include the ability to set breakpoints and support for standard development constructs such as watching variables. A truly unique feature is the Data Viewer, which provides the ability to view rows of data as Integration Services processes them in the data-flow pipeline. This visualization of data can be in the form of a regular text grid or a graphical presentation such as a scatter plot or bar graph. In fact, it is possible to have multiple connected viewers that can display the data simultaneously in multiple formats. Figure 10 shows an example of geographic data visualized using a scatter plot and a text grid.

Figure 10

Programmability

In addition to providing a professional development environment, SSIS exposes all its functionality via a set of rich APIs. These APIs are both managed (.NET Framework) and native (Win32) and allow developers to extend the functionality of SSIS by developing custom components in any language supported by the .NET Framework (such as Visual C#, Visual Basic .NET, etc.) and Visual C++. These custom components can be workflow tasks and data-flow transformations (including source and destination adapters). This allows legacy data and functionality to be easily included in SSIS integration processes, allowing you to use past investments in legacy technologies effectively. It also allows easy inclusion of third-party components.

Scripting

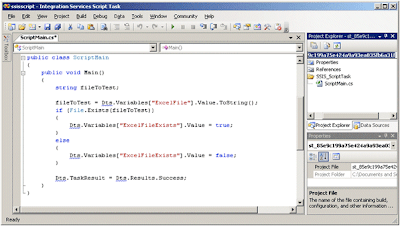

The extensibility previously mentioned is not only limited to re-usable custom components but also includes script-based extensibility. SSIS has script components both for task flow as well as for data flow. These allow users to write scripts in Visual Basic. NET to add ad hoc functionality (including data sources and destinations) and to re-use any preexisting functionality packaged as .NET Framework assemblies.

SQL Server 2008 includes Visual Studio Tools for Applications, which provides a scripting environment in which you can use Visual Basic .NET, or C# to implement script components.

Figure 11 shows an example of a script that checks for the existence of an Office Excel file.

Figure 11

This extensibility model makes SSIS not only a data integration tool, but also an Integration Bus into which technologies like data mining, text mining, and Unified Dimensional Model (UDM) can easily be plugged in to enable complex integration scenarios involving pretty much arbitrary data manipulation and structures.

Monday, October 3, 2011

SQL Server Integration Services (SSIS)

Overview

SQL Server Integration Services (SSIS) is a tool that we use to perform ETL operations; i.e. extract, transform and load data. While ETL processing is common in data warehousing (DW) applications, SSIS is by no means limited to just DW; e.g. when you create a Maintenance Plan using SQL Server Management Studio (SSMS) an SSIS package is created. At a high level, SSIS provides the ability to:

- retrieve data from just about any source

- perform various transformations on the data; e.g. convert from one type to another, convert to uppercase or lowercase, perform calculations, etc.

- load data into just about any source

- define a workflow

The first version of SSIS was released with SQL Server 2005. SSIS is a replacement for Data Transformation Services (DTS) which was available with SQL Server 7.0 and SQL Server 2000. SSIS builds on the capabilities introduced with DTS.

Our high level outline is as follows:

- Creating SSIS packages with SQL Server Management Studio (SSMS)

- Business Intelligence Development Studio (BIDS)

- Creating a simple SSIS package in BIDS

- Deploying SSIS packages

- Executing SSIS packages

Mixed Content: The page at xxx was loaded over HTTPS, but requested an insecure

Mixed Content: The page at ' https ://www.test.com/signup.aspx' was loaded over HTTPS, but requested an insecure script ' http ...

-

State management is a critical thing to master when coming to Web world from a desktop application perspective. Session is used to sto...

-

Internet Information Services (IIS) 7 and above provides a request-processing architecture that includes: The Windows Process Activati...

-

INFORMATION_SCHEMA view that you could use is PARAMETERS. This query will list all stored procedures that have such a parameter: SELECT ...